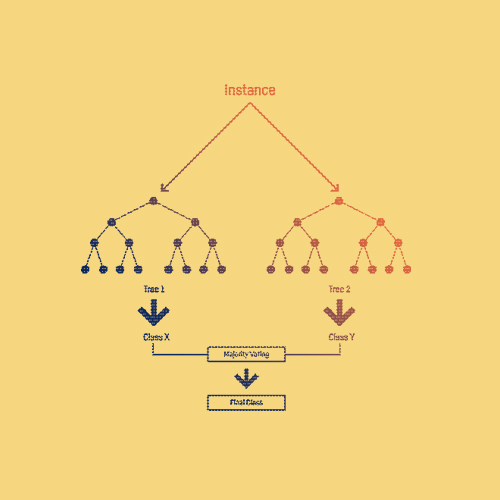

At the heart of statistics, data mining, and machine learning (ML), decision trees are among the most widely used ML algorithms. This popularity derives from their intelligibility, simplicity, and excellent results, especially when stacked as random forests.

Decision trees are supervised ML algorithms that can be used for both classification and regression problems. They are essentially a series of sequential decisions made to reach a specific result.

Their origins are somewhat difficult to pinpoint. Many people trace their locus back to Ronald Fisher‘s paper on discriminant analysis (1936). The AID project by Morgan and Sonquist and the 1966 publication by Hunt also played important roles. In psychology, decision tree methods were used to model the human concept of learning. Along the way, researchers discovered the algorithm was useful for programming. In 1972, the first classification tree appeared in the THAID project by Messenger and Mandell. Berkeley Statistics professors Leo Breiman and Charles Joel Stone, along with Jerome H. Friedman and Richard Olshen from Stanford University, then began developing the classification and regression tree (CART) algorithm. This was unveiled in 1977.

“The one thing I’ve learnt in my consulting, is that you have to get to know your data.”

Leo Breiman

Innovations and Improvements

1984 saw the first official publication with a CART software — a revolution in the world of algorithms. Even today, CART is one of the most used methods for data analysis. Upgrades have included truncating unnecessary trees, tunneling, and choosing the best tree version. Then computer science researcher John Ross Quinlan came up with a new concept: trees with multiple answers. He invented ID3 (Iterative Dichotomiser 3), which he continued to upgrade until C4.5 was born. It was ranked No. 1 in the Top 10 Algorithms in Data Mining at the IEEE ICDM Conference 2006.

The next step? Random forests, a tree-based ML algorithm leveraging the power of multiple decision trees. The first such algorithm was created in 1995 by Tin Kam Ho, while leading the Statistics and Learning Research Department at Bell Laboratories. Her work was then extended by Leo Breiman and Adele Cutler.

Today, decision trees and random forests still drive many data mining software programs.

Key Dates

-

1936

Discriminant Analysis

British mathematician and statistician Sir Ronald Aylmer Fisher introduces the Iris Flower Data Set as an example of discriminant analysis. This set the foundations for the decision tree.

-

1972

The First Classification Tree

Messenger and Mandell develop Theta Automatic Interaction Detection (THAID), giving us the first classification tree.

-

2006

Random Forests

Leo Breiman and Adele Cutler extend Tin Kam Ho’s algorithm and register "Random Forests" as a trademark. As of 2019, it is owned by Minitab, Inc.